Feature

What you get

is not just

what you see

Scientists have built a novel AI system that

rewrites the rules for computer vision.

It might soon turn neuroscience on its head.

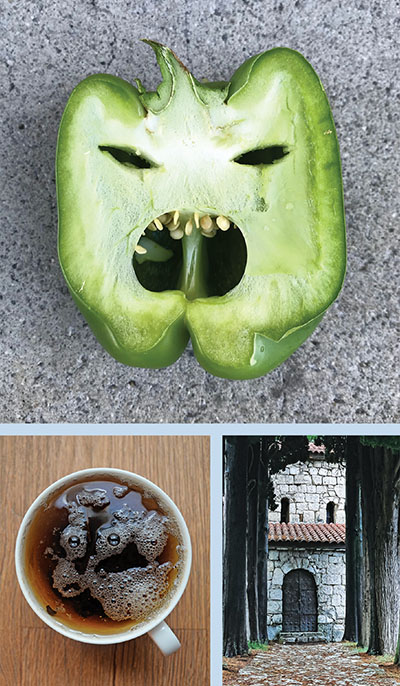

By Bahar GholipourThe picture is unmistakable: a pepper sliced in half. Yet when Winrich Freiwald projects it on the big screen during a recent lecture, soft giggles erupt from the audience.

Because while all that’s there is one half of a vegetable, it’s nigh impossible not to see something else—a spooky green face, with holes for eyes and seeds for teeth, staring anxiously ahead. “We know full well it’s a pepper,” Freiwald says, his long legs pacing the stage of Rockefeller’s Caspary Auditorium. “But we cannot help seeing the face.”

It’s not our fault; our brains come equipped with a neural machinery whose sole task is to perceive and recognize faces. This internal face detector never rests—every time certain complex patterns hit the retina, it gets activated (see “Why there’s a man in the moon,” below). To a neuroscientist, the phenomenon is not just comical but consequential. Pepper faces, along with a host of similar illusions, illustrate profound mysteries about the brain and its relationship to the world around us. Vision may be the best understood of the brain’s functions, yet we seem to have misunderstood something about the way our minds derive meaning from visual inputs.

If the brain is simply processing incoming cues, how does it quickly turn ambiguous data into coherent representations of objects and scenes?

“Examples like this suggest that when we see something, the brain is doing a lot more than just registering light,” Freiwald says, referring to the textbook description of how we see: Light bounces off an object, hits the retina, zooms along the optic nerve, and, voila—electric signals are transformed by the brain into a teacup. For one thing, this canonical understanding of the visual system doesn’t account for the fact that a pepper seed isn’t always a pepper seed but can register as a tooth under certain circumstances. And if the brain is simply processing incoming cues, how does it quickly turn ambiguous data into coherent representations of objects and scenes, like when you recognize your grandmother’s cheerful face in a blurry, old photograph?

Freiwald is among a growing circle of scientists turning to a radically different view, one that posits that what we see isn’t merely a reflection of what’s out there. It is more akin to a mental construct, something cognitive scientists call inference. “We think the brain has some kind of internal component that not only detects incoming stimuli but also generates them,” he explains. “In a manner of speaking, the brain is constantly hallucinating.”

13Number of milliseconds it takes for the human brain to see an image.

A few years ago, Freiwald teamed up with computational cognitive scientists Joshua B. Tenenbaum and Ilker Yildirim, who concocted an idea for a system to test this generative theory of vision. Together, the scientists set out to build a new kind of artificial intelligence to explore whether the process by which we recognize faces or other objects starts in the brain itself. Among the things they wanted to know was whether a machine could be programmed to match observations made in biological experiments. If it could, there would be far-reaching implications for neuroscience. And it gradually became clear that their work might have ripple effects: Machines that don’t just think faster than us but also, on a cognitive level, behave more like us could help propel advances in everything from developing safer autonomous vehicles to slowing climate change.

But much would depend on what the scientists learned.

Faces are an elite category of human perception. They are among the first things we learn to look at as infants, and as we grow older, our social functioning relies heavily on the ability to recognize family members, friends, and foes and read the facial expressions of people we interact with. This may be why humans and other primates have evolved specialized brain cells just to recognize faces. “It’s a notably inefficient use of neurons,” Freiwald says. So much so that when he first heard about this phenomenon during his graduate studies, he rejected the notion. “I thought, that’s not an elegant solution for the brain,” he says. “To have neurons that respond only to one object category and not others? That is odd.”

Even Charles G. Gross, a cognitive neuroscientist at Princeton University who first discovered face neurons in the 1970s, was baffled. It took another two decades before MIT neuroscientist Nancy Kanwisher identified the fusiform face area, a region in the brain’s inferior temporal cortex that is specialized for face recognition. Freiwald trained in Kanwisher’s lab as a postdoc, then joined Margaret Livingstone at Harvard Medical School, where he worked with then colleague Doris Tsao to combine brain imaging studies with recordings of individual neurons. The scientists ultimately uncovered a network of six pea-sized patches composed almost entirely of face neurons.

Since then, Freiwald has been able to characterize these patches in great detail. Among his lab’s findings is that each patch processes a different dimension of facial information. In one of the first patches to get activated, for example, neurons are sensitive to facial features, such as the distance between a person’s eyes. In one of the middle patches, neurons code for orientation—some favor right-side profiles; others half profile. And neurons in the last patch respond to faces as a whole, no matter their orientation.

Having deciphered the functions of the face patches, Freiwald was able to chart the itinerary of a face as it travels through the mind, transforming from visual input into recognized object. And along the way, he saw things he wasn’t able to explain.

In one set of experiments, Freiwald’s team showed macaque monkeys renderings of human faces seen from various angles while monitoring neuronal activity within the face patches. In one of the middle patches, neurons responded differently to pictures of the same face seen at different angles, as the scientists had expected. But there was one bizarre exception: When the monkeys saw mirror-reflected poses—say, one picture of a face turned 45 degrees left from center and another with the same face turned 45 degrees in the opposite direction—the neurons responded as if the two pictures had been identical.

This mirror-symmetry effect was a mystery. In real life, faces don’t suddenly jump from left to right; they rotate from one pose to the other. Freiwald and his colleagues couldn’t explain, at least not within the conventional framework for how vision works, why the neurons were programmed for mirror symmetry. Had we gotten something fundamentally wrong about how the brain is wired?

“What I cannot create, I do not understand,” the theoretical physicist Richard P. Feynman famously said. And for cognitive neuroscientists, one way to understand how the brain operates is to create AI systems that emulate its computational principles.

What is common sense, scientifically speaking? What is that crucial thing that most of us can relate to yet struggle to define?

An auspicious encounter presented Freiwald with the opportunity to do just that. In 2013, he arrived at the newly launched Center for Brains, Minds, and Machines, a multi-institutional forum located at MIT that brings together scientists working on biological and artificial intelligence. It was there that he first met and began collaborating with Tenenbaum, a computational cognitive scientist at MIT whose work focuses on understanding how the brain makes inferences from sensory data, and Yildirim, a postdoctoral researcher co-mentored by Freiwald and Tenenbaum now on the faculty at Yale University.

Together, the three scientists began imagining a new kind of AI that could be trained to recognize faces. A cousin to the system that unlocks smartphones, theirs would be able to make inferences and thus generate new data in addition to processing incoming pixels. If successful, it would provide an experimental system for studying some of the most elusive aspects of being human, like how we effortlessly arrive at our commonsense understanding of the world, so rich in detail and meaning, when all we have to go on are visual cues that often contain a bare minimum of information.

Or, as Tenenbaum once put it: “How do humans get so much from so little?”

AI has begun saturating into our lives. It proofreads our emails, curates our social media feeds, and checks our credit cards for fraudulent activity. Yet that is nothing compared with what the technology promises to do in the future: write newspaper articles, tutor students, diagnose diseases.

In fact, there are already computer-vision machines that outperform doctors in detecting and classifying skin cancer. Like many other tech marvels—Bing Chat, deepfakes, Google Translate—they rely on deep neural networks, or deep nets, AI systems designed to operate like the networks of neurons in the human brain. Generally, the deep nets used in computer vision reflect the conventional understanding of human vision, consisting of an input layer and an output layer with more interconnected layers in between. Like human toddlers, these systems can be trained to recognize objects by essentially being told what they’re looking at, and they continuously recalibrate internal connections until they’re able to correctly associate patterns in the data with the right answer.

More than meets the eye

Most of the time, our eyes and brain work together seamlessly to process a pretty clear picture of reality. But the mechanics of our visual equipment can be tricked by scientists, unusual weather, or even our own preconceived notions. The resulting illusions lay bare the machinery operating just below consciousness. Here are four examples of when seeing should not be believed.

Once trained, a deep net can be unleashed to classify an input it hasn’t seen before. If it sees a sycamore tree for the first time, for example, it may have seen enough other tree species to correctly identify the sycamore as a tree. An untrained system, on the other hand, might classify the sycamore as broccoli.

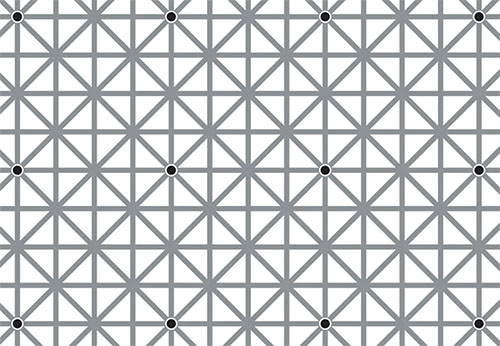

But even the most advanced deep net behaves differently than the brain in several important ways. For example, it may require thousands of hours of training with millions of examples before it can accurately distinguish trees from broccoli, while a human toddler easily learns to categorize these objects after seeing just a few examples. Moreover, even after extensive training the system will occasionally make errors that no human would make, like mistaking a squirrel for a sea lion. And just a little bit of visual noise, which humans will easily ignore or not even notice, can break down the AI entirely. (To be fair, humans make mistakes, too, but ours are very different in nature. We fall for optical illusions that AI is completely insensitive to, like when we lose our way trying to trace the path of an impossible staircase in an M.C. Escher print, for example.)

“Clearly, our conventional deep nets are missing something crucial,” Freiwald says. “They seem to lack common sense.”

But what is common sense, scientifically speaking? What is that crucial thing that most of us can relate to yet struggle to define? Important clues may lie in the generative mechanism that Freiwald and others find in the seeing brain: It isn’t merely recording what’s “out there,” as most computer-vision systems are trained to do, but also dreaming or hallucinating (in a healthy way) to proactively produce our perception of the world.

The concept of vision as a generative process best surfaces when it produces the wrong answer. Think of a time when you momentarily misperceived an object for another—maybe a hose on the ground made you think you were about to step on a snake, for example. For a split second, your brain conjured a distinctive image of the snake—until you realized your mistake, at which point the slithery creature instantaneously transformed into a harmless piece of plastic tubing. But where did that snake come from in the first place?

The 19th-century polymath Hermann von Helmholtz was among the first to point out the neuroscientific significance of such quotidian delusions. He recognized that when we see an object, the light that hits the retina is often less than ideal—the room might be dim, the object might be partially hidden from view, or a cacophony of other visual stimuli might be distracting us. How does the brain, almost always, correctly see through all that ambiguity? Helmholtz suggested that vision is a kind of inference in which the brain produces a hypothetical object—in much the same way it churns out images when we are dreaming—and then uses the actual sensory input to confirm its hypothesis.

According to this theory, the brain is much more creative than we’ve been giving it credit for. One step ahead at every turn, it doesn’t just process incoming information but also tries to deduce the causes behind it. When we see an object, the brain offers up its best guess about what we’re looking at (could that thing on the table be a teacup?). Then it collects incoming data to fact-check that inference (indeed, it is a bowl with a handle, just like other teacups I’ve seen before). What we actually perceive, then, is a dreamlike simulation of the object, originally produced by the brain and subsequently refined by sampling data received with the eyes.

1Smallest number of photons a human eye can detect, according to a 2017 Rockefeller study.

Many of Helmholtz’s contemporaries dismissed this idea, however. And although his inference theory gained some popularity among cognitive scientists in the late 20th century, it never quite took off, partly because scientists couldn’t reconcile an elaborate inference process with the breakneck speed of biological vision.

It wasn’t for lack of trying. Yildirim points to recent efforts to build a generative computer-vision system based on the inference approach. No matter how those systems were engineered, they required extensive iterative processing that took much longer than the 100–200 milliseconds the brain takes to perceive a detailed scene. “For both AI folks and neuroscientists, it has been unsettling that the process would be so cumbersome and slow,” he says. “No one believed that this could be the way the brain works, because our perception is nearly instantaneous.”

The team had an idea for how to create a supercharged, generative AI system. They essentially combined the best features of two approaches—the speed and processing power of established deep nets and the inference ability of a generative system—to build a new computer-vision machine dubbed the efficient inverse graphics network, or EIG. The goal was to use it as a model for the brain’s face-perception machinery, “arguably the best studied domain of high-level vision,” Yildirim says.

The brain may be much more creative than we’ve been giving it credit for. It doesn’t just process incoming information but also tries to deduce the causes behind it.

While deep nets are conventionally trained to classify objects, starting from pixels and working up, Yildirim and his colleagues put the EIG through a different educational program. They showed the machine 3D renderings of 200 human heads and taught it to detect the underlying image structure, breaking down the images into their basic components. The system’s deep net was able to do this because Yildirim had equipped it with an inverted version of a graphics processing unit, or GPU, a computer chip that enables the quick rendering of 3D graphics and animations for computer games, among other things. But instead of creating graphics, the deep net ran its GPU backward to deconstruct the renderings that it was shown.

To see whether the EIG would offer a more realistic model of human perception, all that remained was to test it.

It worked. When Yildirim showed the EIG a 2D image of a face it hadn’t encountered during training, the machine moved through its programming to arrive almost instantaneously at a 3D version of that same face. More important, its inside layers evidenced the same properties that Freiwald had spotted in the brain, including the mirror-symmetry effect. “The EIG mimicked the main stages of face processing and reproduced the physiology,” Freiwald says.

To the scientists’ delight, their AI system also mirrored human vision at the behavioral level. For example, it made humanlike mistakes when the researchers tested it on visual illusions like the hollow mask (see an example in the slider above). In the classic rendering of this illusion, a face appears to be rotating from right to left. When it reaches a half rotation, you realize you have been looking at the back of a mask—a concave rendering of a face. Yet as the face keeps rotating, the illusion resurfaces again and again; even though you know you’re looking at a hollow mask, you’ll continually revert to seeing a regular, convex face, as if your brain insists on interpreting the incoming information in the context of ordinary faces.

Whereas conventional AI doesn’t fall for the illusion, the EIG misperceived the concave mask in the same way we do. And a look at the machine’s inner workings confirmed that it had computed the incoming light erroneously. “It’s one line of evidence that the human brain is really doing the inference approach, very different from that of a conventional deep net,” Freiwald says.

2ndRank of the human eye in terms of the complexity of organs in the body. Only the brain tops it.

All of which suggests something truly remarkable about the brain, according to the researchers: When we see a face, a teacup, or anything else, our brain infuses the object with an interpretation, producing a richer bounty of data than the object itself provides. This inference might explain how we can learn so much about what we’re looking at so quickly, potentially providing a recipe for a crucial aspect of human intelligence, perhaps that common sense that AI systems famously lack.

“When you see a picture of Audrey Hepburn, you don’t just see a two-dimensional arrangement, you’re inferring how the face looks in 3D,” Freiwald says. “Yet that information is not really there in the picture itself. That we get more out of an image is a form of intelligence.”

The source of this intelligence, or what makes such inferences possible, can be thought of as knowledge structures embedded in our brains, that guide our perception, thinking, and actions. Such knowledge has likely formed partly during evolution and partly through early life experience, as when infants develop an understanding of gravity by dropping their sippy cup. Once we’ve figured out this basic law of physics, that knowledge stays with us and is called on each time we catch a falling dish.

“Our thinking is structured around a basic understanding of the world in terms of physical objects and entities, other humans and animals, and how they interact,” Tenenbaum recently said at a conference at Cold Spring Harbor Laboratory. Such phenomena are sometimes referred to as “intuitive theories.” Tenenbaum calls them our commonsense core.

“The EIG is taking us a step closer toward reverse-engineering the human brain,” says Yildirim, who is currently teaching the machine to move beyond faces—recognizing whole bodies, places, and even how physical objects move and react to external forces. “That means we’re also on a good course to ultimately advance the potential of AI.”

Computers are brilliant except when they are stupid. Notwithstanding the stunning advances in AI in recent years, progress in the field is now facing an impasse. Self-driving cars won’t be zooming down the roads anytime soon—not as long as they abruptly slam on the brakes when tricked by soap bubbles. And no home helper robot can come online until we can trust it won’t load the cat into the dishwasher. The future will tell whether these systems can become wiser by incorporating generative-processing capabilities like those of the EIG.

Yet to Freiwald, just as exciting is what the EIG and similar systems might do for neuroscience. “Building a machine that recognizes faces the same way a primate brain does is a huge milestone,” he says. “It shows us that we’ve correctly understood this aspect of the brain’s function and that we’ll be able to apply that knowledge to study the brain’s functions more generally.”

Because how we see a face tells us much about how we see the world, literally and figuratively. If perception is shaped by the brain, then it is fundamentally a cognitive act. And then the phenonenon of face processing, fascinating unto itself, becomes an entry point for exploring how neural processes translate into human nature: how the brain generates our thoughts, emotions, and behaviors and how we perceive others and adapt to a social environment. Moreover, this novel way of looking at the brain—as the active builder of our models of the world—provides new frameworks for studying the mechanisms behind autism spectrum disorders and mental illnesses such as bipolar disorder and schizophrenia.

“It’s still extremely early days,” Tenenbaum noted during the conference. “And that’s the most exciting time.”