Interview

Biomedical science in the age of AI

With supercomputers, algorithms, and troves of big data, the AI revolution is sparking new opportunities—and some challenges.

By Megan Scudellari

One of the greatest achievements in AI was inspired by the human brain itself. Starting in the 1980s, computer scientist Geoffrey Hinton and physicist John Hopfield developed artificial neural networks by training machines to process data using principles discovered in the visual system of the brain.

That work, for which Hinton and Hopfield received the 2024 Nobel Prize in Physics, set the foundation for today’s powerful machine learning, including AlphaFold, an artificial intelligence program that predicts 3D structures of proteins from their amino acid sequences. For creating such a transformative tool, AlphaFold’s developers, Demis Hassabis and John Jumper of Google DeepMind, also received Nobel Prizes in 2024, theirs in chemistry.

And so, from drawing inspiration from biology to dramatically advancing the biomedical sciences, AI is coming full circle. With their rapidly improving ability to collect, classify, organize, and interpret information, computers in some ways now surpass what people can do: identifying patterns, relating concepts, and drawing conclusions from amounts of data far too massive for human brains to process.

With these capabilities, the technology has begun transforming workflows and speeding discovery in biomedical labs, which constantly generate vast troves of scientific data through whole genome sequencing, digital imaging, single-cell genomics, and more. Researchers are now exploring the use of AI to generate hypotheses, automate experiments, and develop computational models.

In fact, academic research may be the ideal playground for AI tools, researchers suggest, since results are repeatedly tested and analyzed. As a result, AI in the lab may be less prone to errors that go unnoticed, including so-called hallucinations, than general-purpose tools such as chatbots.

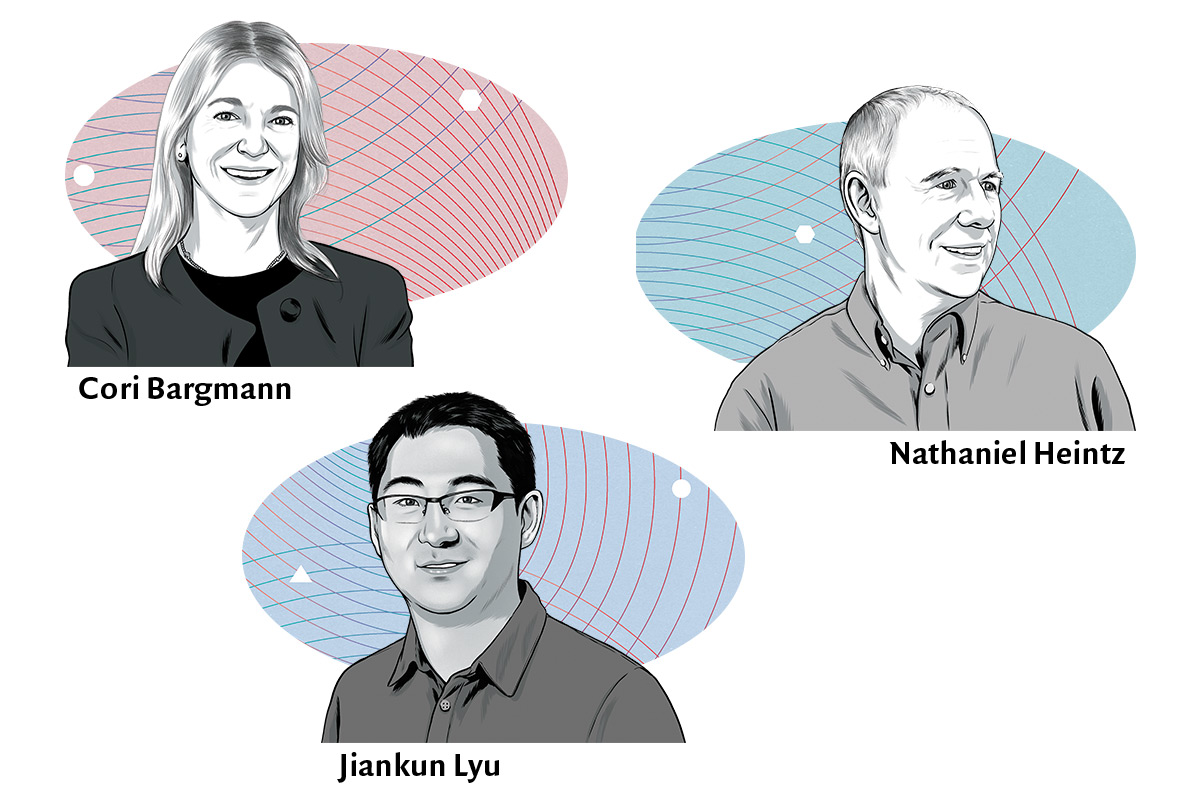

At Rockefeller, researchers are applying machine learning and signal processing to their projects in new ways while exploring questions about data quality, the limits of AI, and trustworthiness. We discussed these topics and more with three Rockefeller scientists currently using AI in their work: neuroscientists Cori Bargmann and Nathaniel Heintz and biochemist Jiankun Lyu.

Bargmann is the Torsten N. Wiesel Professor and head of the Lulu and Anthony Wang Laboratory of Neural Circuits and Behavior at Rockefeller and the former head of science at the Chan Zuckerberg Initiative. She studies the neuronal basis of behavior in C. elegans. Heintz is Rockefeller’s James and Marilyn Simons Professor and director of the university’s Zachary and Elizabeth M. Fisher Center for Research on Alzheimer’s Disease. His work explores the genes, circuits, and cells that contribute to mammalian brain function and dysfunction. Lyu is an assistant professor and head of the Evnin Family Laboratory of Computational Molecular Discovery, where he develops computational methods to screen ultra-large digital libraries for small molecules that can help interrogate biological targets.

Give us the big picture. How is AI changing research in the life sciences?

CB: There are two major ways in which AI is making things possible at Rockefeller that were extremely difficult before. One is the ability to bring data together at scale. AI has the ability to analyze and find patterns in very large, very complex multimodal datasets. As biologists, we’ve been very good at studying one molecule at a time, but AI methods enable us to look at complex biological systems and pull out all kinds of information flowing through those systems. For example, in neuroscience this gives us the ability to examine the dynamics and interactions of many cells in the brain.

The second advance is in machine vision: image processing that identifies objects and follows them over time. When a highly trained person looks at a complex image, they might find objects one at a time. Machine learning models can do it much faster because they can process far more information at once. These methods are being applied to everything from finding specific proteins in complex electron micrographs to tracking fast-moving mosquitoes as they home in on a host.

JL: AI is changing work in many ways, even in small things. For instance, chatbots are becoming competent at programming, so if a researcher needs a simple script, a chatbot can write it quickly and well. For scientists who used to spend a lot of time writing programs, especially experimentalists without much programming experience, these tools can really expedite their work.

AI is also enabling analyses at a much larger scale. For example, Foldseek, a machine-learning-based protein structure search tool, can cluster the roughly 214 million predicted structures in the AlphaFold Protein Structure Database by structural similarity, generating functional hypotheses for previously unannotated proteins.

NH: In my laboratory, we study the human brain, which has over 100 billion cells and over 10,000 genes expressed in each cell type. The data we generate is massive; we already use machine learning methods all the time, but we’re hoping newer AI-based methods will help us even more. In my experience as a scientist, methodological breakthroughs are extremely important, and the advent of AI is a big one.

However, people are rightly concerned about how accurate new AI tools will be and whether their conclusions will be valid. We have to work through this phase. We certainly wouldn’t want to hold back from applying AI to the modern datasets now available. I’m excited about the possibilities.

Will AI replace some of the roles or tasks of technicians or academic scientists?

JL: I’m not concerned about that at the current stage. These general-purpose foundation models have made substantial progress, but in biomedical research it is still hard to replace academic scientists and skilled technicians, for two big reasons. First, foundation models are usually good at summarizing published literature but lack scientific insight and vision. Second, AI methods give predictions, not results, and it still takes benchwork to validate those predictions.

NH: AI will be one of many methods required to do effective science, so I don’t think it replaces anything. It extends the approaches we already use to understand our data, such as bioinformatics and machine learning, but it still takes the same teams of people doing bench science to generate the data.

CB: At this point, a skilled person is still better than a machine at generating high-level analyses. Our goal for the future should be to find the right partnership between the human expert and the machine.

There’s a term—human-in-the-loop—that emphasizes the need for human input and expertise to improve a machine’s data analysis. You start by giving the machine annotated information and asking it to sort it out. It can do that—data comes back from the machine—but the experts then look more closely at what the machine has done. What did it do well? What did it miss? How did it sort the information? You can use that information to ask better questions, so that the machine can get better at providing valuable answers. That back and forth between skilled scientists and the machine enables the refinement of data into useful tools and understanding.
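The back-and-forth Bargmann describes resembles what machine learning practitioners call active learning, in which the machine flags the items it is least sure about and a human expert labels them before the next round. The sketch below is a minimal Python illustration using uncertainty sampling, assuming a toy one-dimensional dataset, a simple nearest-centroid classifier, and a simulated expert (the hypothetical `expert` function); none of these names come from the lab’s actual tools.

```python
from statistics import mean

# Hypothetical toy setup: each item is a single number, each label a class name.

def centroids(labeled):
    """Return {label: mean feature value} for the labeled items."""
    by_label = {}
    for x, y in labeled:
        by_label.setdefault(y, []).append(x)
    return {y: mean(xs) for y, xs in by_label.items()}

def predict(cents, x):
    """Nearest-centroid label plus a margin: how much closer the winner is than the
    runner-up. A small margin means the machine is unsure."""
    dists = sorted((abs(x - c), y) for y, c in cents.items())
    margin = dists[1][0] - dists[0][0] if len(dists) > 1 else float("inf")
    return dists[0][1], margin

def human_in_the_loop(labeled, unlabeled, expert, rounds=3, per_round=2):
    """Alternate machine passes and expert review (uncertainty sampling)."""
    labeled, pool = list(labeled), list(unlabeled)
    for _ in range(rounds):
        if not pool:
            break
        cents = centroids(labeled)
        # Machine pass: score every unlabeled item, least confident first.
        pool.sort(key=lambda x: predict(cents, x)[1])
        # Expert pass: the expert reviews the most uncertain items and labels them.
        reviewed, pool = pool[:per_round], pool[per_round:]
        labeled.extend((x, expert(x)) for x in reviewed)
    return centroids(labeled), labeled

def expert(x):
    """Hypothetical stand-in for a human expert's judgment."""
    return "A" if x < 5 else "B"

# Usage: start with two annotated examples and a pool of unannotated ones.
model, labeled = human_in_the_loop([(0, "A"), (10, "B")], [1, 2, 4, 6, 8, 9], expert)
print(model)  # {'A': 1.75, 'B': 8.25}
```

Sending the expert only the borderline cases is the design choice here: the expert’s time goes where the machine is weakest, which is the spirit of asking what the machine did well and what it missed.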

Can you give us an example of AI use in your laboratory?

CB: We are one of a number of labs now using AI methods to track animals at high resolution in complex environments. We study tiny, low-contrast, fast-moving animals against noisy backgrounds. They have quite complex behaviors and are hard to follow. You could track them by hand, but it is extremely tedious. AI now enables us to track many of them at once and gather high-quality data about their behavior. With the machine doing the tracking for us, we can make discoveries many times faster.

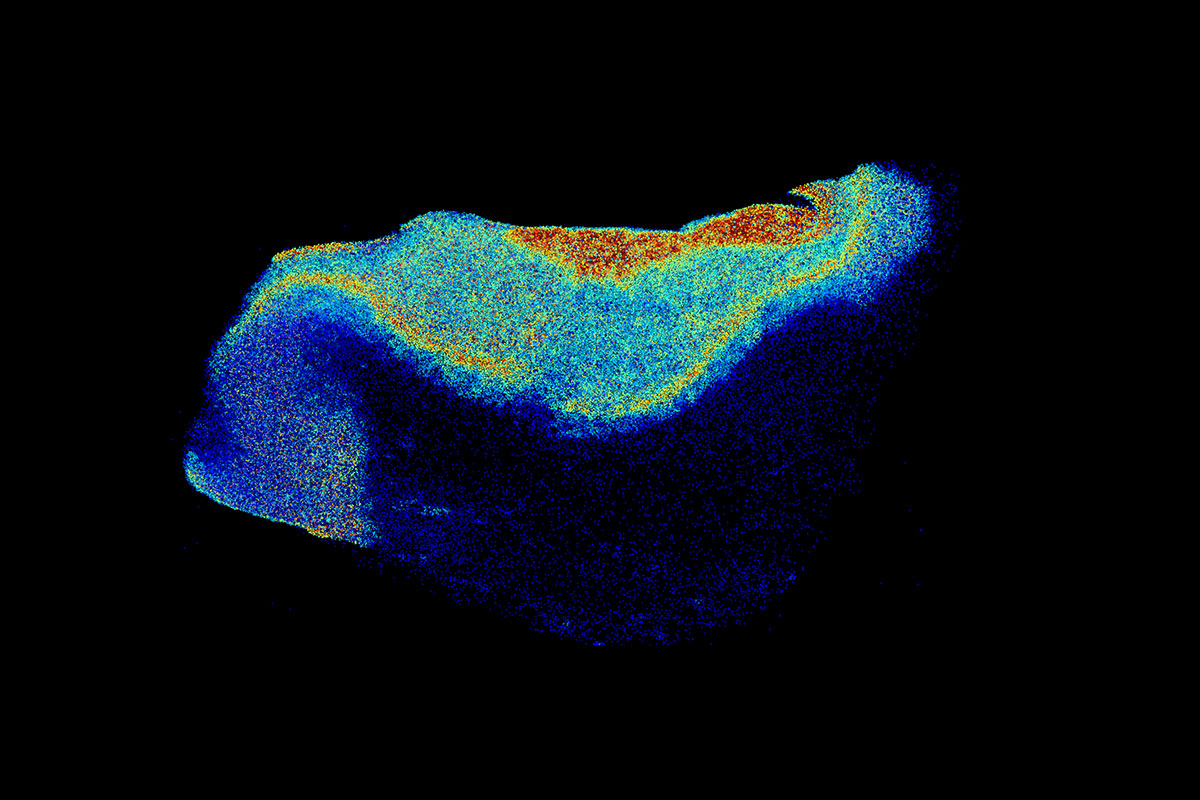

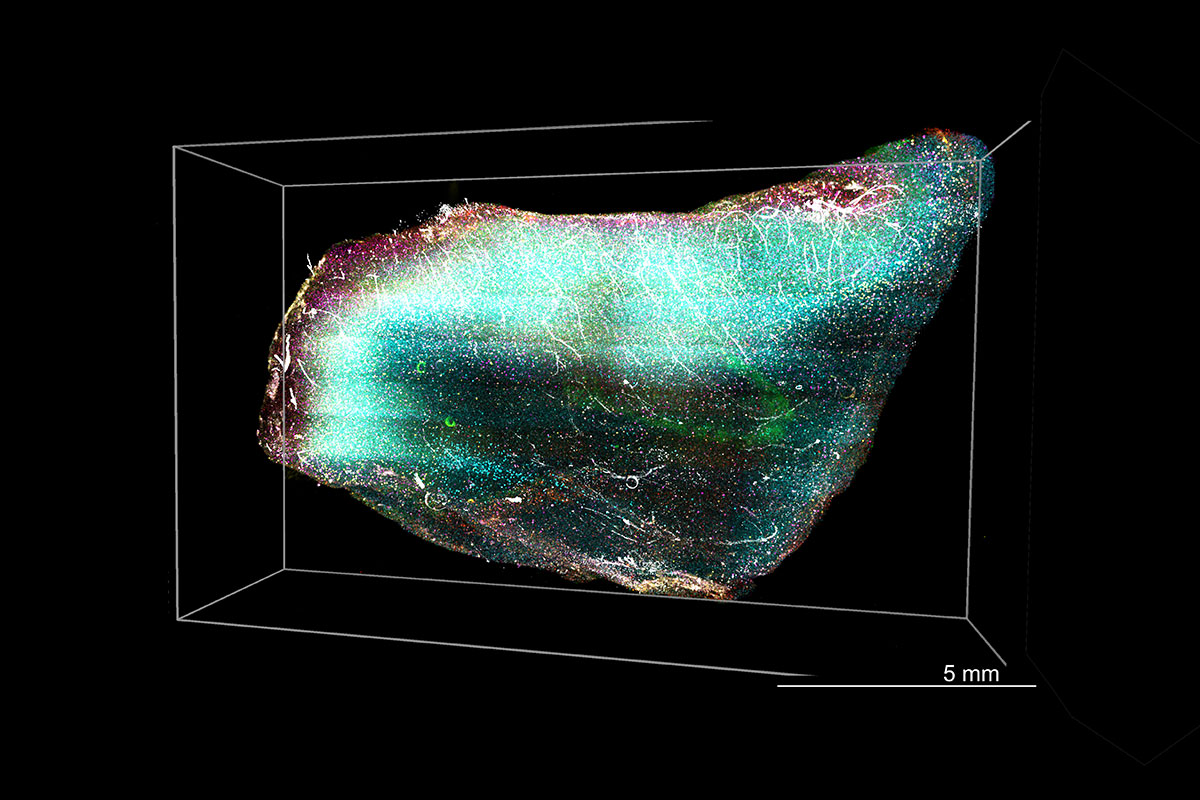

NH: When we are studying neurodegenerative diseases, it’s very important to know which cell types are being lost in any region of the brain. If I take a small 3D piece of the human brain, just two millimeters by one millimeter in size, there are many millions of neurons in there. We have generated methodologies in our lab for visualizing and labeling all those neurons, but counting them by eye or any other methodology is really challenging.

In collaboration with AI experts, we’ve developed an initial ability to count individual neurons. It is ongoing work that needs further refinement, but it’s exciting because we should be able to detect even small changes in the numbers of specific cell types very early in Huntington’s or Alzheimer’s disease.

We’re also using AI to analyze molecular data. To study a certain type of neuron, we’ll profile 20,000 cells of that type, which gives us tens of thousands of individual cell profiles. Analyzing these datasets, each of which holds megabytes of data on thousands of genes, is taxing and difficult. We’re now applying AI to those datasets to find out whether it can reveal more than we’re able to discern using current methods.

JL: We are developing molecular docking software that can quickly predict how small molecules interact with proteins. The software helps us search large chemical libraries for small molecules that bind proteins of interest, with the goal of discovering new molecules for chemical biology and drug discovery.

Starting in 2016, chemical libraries grew from a couple million molecules to tens of billions of molecules. In the next year, I expect them to surpass one trillion molecules. With that amount of data, we need to increase our efficiency as we search that chemical space. For example, if you have a protein in mind as a therapeutic target, you want to quickly know what potential compounds from this library can effectively bind to that target. AI, especially deep learning methods, can help us speed up that search process.
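One common way to speed up such a search is a surrogate-model screen: dock a small random sample with the expensive method, use those scores to rank the rest of the library with a cheap model, and spend expensive calculations only on the top-ranked candidates. The following is a minimal Python sketch under heavy assumptions: molecules are toy two-number descriptor tuples, `dock_score` is a hypothetical stand-in for real docking software, and a k-nearest-neighbor average replaces the deep learning models Lyu mentions. It is not the Lyu lab’s pipeline.

```python
import random

def dock_score(mol):
    """Hypothetical stand-in for an expensive docking calculation.
    Molecules are toy 2-D descriptor tuples; lower scores mean better predicted binding."""
    x, y = mol
    return (x - 0.3) ** 2 + (y - 0.7) ** 2

def knn_predict(docked, mol, k=3):
    """Cheap surrogate: mean docking score of the k most similar molecules already docked."""
    def dist2(other):
        return (other[0] - mol[0]) ** 2 + (other[1] - mol[1]) ** 2
    nearest = sorted(docked, key=lambda pair: dist2(pair[0]))[:k]
    return sum(score for _, score in nearest) / len(nearest)

def surrogate_screen(library, sample_size, top_k, seed=0):
    """Dock a random sample, rank the rest with the surrogate, dock only the top_k."""
    rng = random.Random(seed)
    sample = rng.sample(library, sample_size)
    docked = [(m, dock_score(m)) for m in sample]        # expensive step 1
    seen = set(sample)
    rest = [m for m in library if m not in seen]
    rest.sort(key=lambda m: knn_predict(docked, m))       # cheap ranking of everything else
    shortlist = rest[:top_k]
    docked += [(m, dock_score(m)) for m in shortlist]     # expensive step 2
    docked.sort(key=lambda pair: pair[1])                 # best predicted binders first
    return docked, len(sample) + len(shortlist)

# Toy library of 441 "molecules" on a grid of descriptor values.
library = [(i / 20, j / 20) for i in range(21) for j in range(21)]
hits, expensive_calls = surrogate_screen(library, sample_size=40, top_k=40)
print(expensive_calls, "expensive calls instead of", len(library))  # 80 expensive calls instead of 441
print("best hit:", hits[0])
```

The trade-off is recall: a cheap surrogate can misrank a true binder and leave it undocked, which is one reason the top candidates still need experimental follow-up.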

As AI use has expanded, researchers have documented biased results from some algorithms, stemming from the data used to train them. How can we maintain research integrity and reliability when using these models?

CB: You have to put good data in to get good information out. The primary reason AlphaFold was so successful was that it was trained on a very high-quality dataset, the protein structure information in the Protein Data Bank. The second reason was that there were competitions to validate early models and determine which were the best at prediction, and the field could then build on the most successful models. Based on that experience, scientific AI is currently laser-focused on two things: what the training data is going to be and how we validate the results.

JL: This is a challenge we face in biomedical research. Compared with fields such as image recognition and natural language processing, our field has relatively little high-quality data available for training AI models. Deep learning methods are data-hungry algorithms, so you need to feed them a lot of data to get a reliable model. How to make reasonably accurate predictions from smaller amounts of data, and how to gauge a model’s confidence in its predictions, are areas of ongoing research for computer science teams, and the deep learning field is already building these sorts of tools.

NH: I’m a particular stickler about data quality because I think if the quality of a complex dataset isn’t high enough, you can easily be misled. We have pretty good methods for telling which of the actual datasets we collect are of high quality and which aren’t. For now, if we limit the analysis to our own high-quality laboratory data, then AI can help us analyze that data.

To non-experts, AI can seem like a black box. A lot of data goes in, then, through some magic, an answer comes out. Do AI models in biomedical sciences lack transparency? Can we verify and trust the results?

NH: I don’t really believe results unless they can be validated. If the AI program gives us some strange result which we have no way of validating, I think it will be of limited use for us. On the other hand, scientists know a lot about their topics. I’ve been studying the brain for 30 years, so I think my sense of what can be validated should be pretty good once we start to get results. Of course, every scientist has built-in ideas about what they think should happen, and we have to be careful about these biases as well. Maybe AI will help root out our own biases toward the data. That kind of cautious openness is a hallmark of great science.

CB: Ten years ago, when people started using machine learning models on a large scale, they might put data in, get results out, and see that the output looked pretty good, but they couldn’t figure out what the models were doing. A big advance in science occurred when we became able to look under the hood. We can now examine intermediate steps and see which features the models pulled out, not just the end results. That is one aspect of trustworthiness, and it’s important from a scientific perspective: As a scientist, you can’t have a black box.

An even more basic aspect of trustworthiness is validation. In the lab, we might do experiments and use them to train a model. But we don’t use the same experiments to determine if the model is good. Instead, we use brand new, validated data that was gathered independently. Then we put that data in, knowing what results should come out of the model. You give the model a test.

JL: There are many ways to validate predictions from AI models, but it is hard to validate all of them, because the models can generate enormous numbers of predictions. Even newly published models, including some trained by commercial companies and well-known labs, will need quite a long time to realize their potential, because experimental validation takes much longer than prediction. My greatest fear for AI is that we end up with a bunch of published AI models that give you lots of predictions, but without any validation. People should consider these predictions carefully.
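The validation Bargmann describes, fitting a model on one set of experiments and then testing it on independently gathered data with known outcomes, can be illustrated with a minimal Python sketch. It assumes a simple straight-line model fit by ordinary least squares, made-up measurements, and a hypothetical error `tolerance`; real biological models and acceptance criteria would be far more involved.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx

def mean_abs_error(model, xs, ys):
    """Average gap between the model's predictions and the known outcomes."""
    slope, intercept = model
    return sum(abs(slope * x + intercept - y) for x, y in zip(xs, ys)) / len(xs)

def validate(train, test, tolerance):
    """Fit only on the training experiments, then grade the model on independent ones."""
    (train_x, train_y), (test_x, test_y) = train, test
    model = fit_line(train_x, train_y)             # the test data never touches fitting
    error = mean_abs_error(model, test_x, test_y)  # "give the model a test"
    return model, error, error <= tolerance

# Hypothetical numbers: four training experiments, two independent test experiments.
model, error, passed = validate(([1, 2, 3, 4], [2.1, 3.9, 6.2, 7.8]),
                                ([5, 6], [10.1, 11.9]),
                                tolerance=0.5)
print(model, round(error, 2), passed)
```

Keeping the test set out of `fit_line` entirely is the point: an error measured on the training data would only show how well the model memorized what it had already seen.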

What’s your greatest hope for AI in the life sciences?

NH: Right now, we only have probes to detect signals in the brain that indicate the final stages of neurodegeneration. Ideally, we could use AI-based drug design to create probes that could be used in living human beings to assess the earliest stages of neurodegeneration and substance abuse, and to watch them progress in precise detail.

I can imagine a world where AI generates enough information to create a whole new panoply of probes and methodologies that would enable scientists to tell precisely what is happening in the brain during illness, so we can learn to design new interventions.

CB: There is a lot of excitement around the idea of using AI methods to decode complex relationships in medicine and neuroscience. In translational neuroscience, there are some astonishing uses of AI, like reading brain signals from paralyzed patients with ALS, transforming those signals into speech, and allowing them to talk. These are still rare examples that require invasive brain surgery and massive resources, but they show the promise of AI to alleviate human suffering. Ideally, I can imagine AI methods putting together many complex pieces of information from a patient someday. We could see not just symptoms, but also a long-term view of the individual that includes the person’s genome and environmental exposures, the trajectory of their blood markers (not just “normal” or “abnormal”), and their past medical history—and come to a diagnosis and treatment plan. For psychiatric disorders, for example, we are treating very complicated aspects of an individual, and we need to have the individual in mind. There is exciting potential in the future to work with the complexity and heterogeneity of human data to inform medicine.

JL: After the Nobel Prizes for AI last year, I’m optimistic that a lot of young scientists will go into this area and develop new architectures for the application of AI to the sciences. Additionally, as people start to consider what is needed to get the most out of AI models, bench scientists are thinking about how to generate more high-quality data for these data-hungry algorithms, so that area is flourishing. There are so many reasons to have great hope for the use of AI in the biomedical sciences.